23/01/24

AI risks in global health ‘must be accounted for’ – WHO

By: Abdallah Taha

Healthcare providers need to be aware of the risks of using AI – especially in low- and middle-income countries – the World Health Organization (WHO) said.

Large multi-modal models (LMMs), a type of generative AI which has wide applications across healthcare, could transform global health outcomes, vastly improving diagnostics and treatments, according to the WHO.

The technology has been adopted faster than any consumer application in history, the UN agency says, citing the recent emergence of ChatGPT, Bard and Bert platforms.

“We’re all stepping into a new era of responsible AI use in public health and clinical medicine,” Alain Labrique, WHO director of digital health and innovation, told a press briefing on Thursday (18 January).

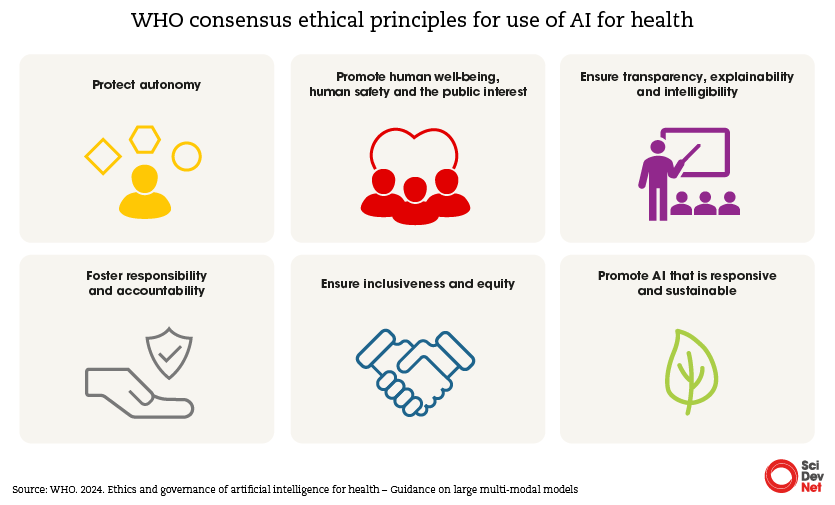

The WHO has produced new guidance on the ethics and governance of LMMs, which build on the technology used for text-based models such as ChatGPT. They can accept multiple data inputs such as text, videos, and images to generate different outputs.

“The principles and recommendations within this document pave the way for a future where AI contributes to the wellbeing of humanity, adhering to the highest ethical standards,” Labrique said.

The technology has widespread uses within healthcare, including diagnosis, scientific research and drug development, medical training, administration, and symptom assessment by patients themselves.

AI systems could be deployed to analyse huge amounts of medical data, including images, scans, and electronic health records, in order to provide diagnoses and bespoke treatment plans – and even predict patient outcomes.

The technology has the potential to save lives, improve healthcare, and make it more efficient, freeing doctors from routine tasks and paperwork.

Philippe Gerwill, a corporate advisor for AI 2030, an initiative geared towards responsible AI, told SciDev.Net: “In regions experiencing a scarcity of medical practitioners, LMMs stand out as a pivotal tool in augmenting healthcare accessibility.

“By enhancing the productivity of healthcare workers, these machines can mitigate the impact of doctor shortages, ensuring broader and more equitable access to medical care.”

However, alongside these potential benefits lie significant risks that must be carefully considered, the WHO warns.

“Generative AI technologies have the potential to improve healthcare, but only if those who develop, regulate and use these technologies identify and fully account for the associated risks,” said WHO chief scientist Jeremy Farrar.

“We need transparent information and policies to manage the design, development and use of LMMs.”

Misdiagnosis

Overestimating the capabilities of LMMs without acknowledging their limitations could lead to dangerous misdiagnoses or inappropriate treatment decisions, the WHO says.

One risk is the dependence of healthcare systems on LMMs that are not properly maintained or updated, particularly in low- and middle-income countries.

The reliance on LMMs could also lead to job losses and require significant retraining for healthcare workers.

“There is no free lunch,” Rohit Malpani, of the WHO’s research for health department, told the briefing. “There are different risks associated with each use case.”

“There are also broader health systems and societal risks.”

The training and use of these powerful models also come with a substantial environmental cost, in terms of carbon emissions and water consumption.

In addition, the WHO raises concerns about their development and deployment being concentrated in the hands of large tech companies, due to the financial costs of training and maintaining them.

“These models, whatever the benefits, may reinforce and expand their power and dominance versus governments and health systems,” added Malpani.

Inequalities

The WHO also raises issues around equality of access to these models, which could be limited by the digital divide and high subscription fees, exacerbating existing health inequalities between developed and developing countries.

Furthermore, LMMs trained on biased data could perpetuate these biases within healthcare systems.

A major challenge lies in building the necessary infrastructure and regulating the use of AI across public and private sectors.

“It’s really critical that we recognise the variances that exist across countries and in the availability of robust data,” said Labrique, adding: “We’re working very closely to enable countries to ensure that they have the good governance needed to shepherd and steward the use of AI in the health sector.”

However, Gerwill at AI 2030 thinks there is more to be done.

“Initiatives such as providing grants, access to shared cloud computing resources, and open datasets could be immensely beneficial,” he said.

He said international bodies can level the playing field by enabling low- and middle-income countries to acquire the computational power, data, and expertise needed for the development and application of LMMs.

International organisations can facilitate knowledge transfer through events and information platforms, says Gerwill, as well as supporting countries with local data, which is crucial for these models to reflect regional needs and contexts more accurately.

“Championing inclusive development is important,” he said. “It is vital for international organisations to ensure that stakeholders from nations with fewer resources are actively involved in the development and governance processes of new LMM technologies.”

Ultimately, the WHO recognises that some harm from AI is inevitable. Its guidance offers recommendations on liability schemes and calls for compensation mechanisms, in the event that patients suffer as a result of AI.

“Implementing comprehensive liability regulations and regulatory oversight is essential,” agrees Gerwill.

“It is imperative for regulators to establish clear liability norms to guarantee that individuals adversely affected by an LMM receive adequate compensation and legal recourse.”

This piece was produced by SciDev.Net’s Global desk.