By: Sharon Dunwoody

How can reporters get to the ‘truth’ in science? Sharon Dunwoody says following the ‘weight of evidence’ avoids bias.

Let me start with an audacious assertion: A major problem confronting science journalists is that they have trouble communicating what’s true. Even when competing claims are wildly lopsided — think climate change, for example — science journalists feel that they cannot tell their audience that one of the claims is surviving scientific scrutiny better than others.

If I can convince you that this problem is real, I then want to offer a better strategy for handling it: a tactic that I call ‘weight-of-evidence’ reporting.

Accurate, but not necessarily true

But first, the problem. Accuracy has long been a crucial standard in journalism, and it is a noble goal. But accurate is not a synonym for true.

Usually, ‘accuracy’ reflects the ‘goodness of fit’ between what a source tells the journalist and what he or she writes. It is not an independent judgment on whether that assertion is true. Put another way, accuracy more often reflects ‘reliability’ (how well the reporter captures the gist of what the scientist said) than ‘validity’ (whether the assertion made by the source is likely to be true).

Why is validity given short shrift? Science journalists take pride in helping audiences sift the wheat from the chaff of scientific claims. But several factors get in the way of establishing the truth.

One is that no single journalist knows enough to evaluate most truth claims. If an engineering firm issues a press release announcing that it has developed solar panels capable of capturing four times more of the sun’s energy than the typical silicon panel, a reporter will likely have neither the skills nor the time to burrow into the peer-reviewed literature to assess the quality of the evidence.

Experienced journalists will often handle that problem by talking to other scientists. While this may help judge a claim’s veracity, it does not solve a bigger problem.

When editors or audiences detect what they believe to be the reporter’s opinion in a story, that journalist risks being accused of bias. Indicating which scientific claims are more likely to be true sets a journalist up for a serious credibility problem.

So what is a journalist to do? Well, one time-honoured surrogate for making validity claims is to ‘balance’ competing claims. If the journalist cannot say which among a series of claims is likely to be true, they can at least offer the range — the climate scientist and the climate skeptic, the ‘he said/she said’ format — leaving the audience to reach their own conclusion.

But while the journalist may be sending a legitimate message — ‘the truth is in here somewhere’ — studies of audience reactions indicate that they, unfortunately, are taking home a very different message: ‘no one knows what’s true’. And that, I would argue, is an inaccurate and unacceptable outcome.

Weigh up the support

Let me offer one possible escape hatch: weight-of-evidence reporting.

This strategy does not call on science journalists to evaluate the scientific evidence for and against claims, nor to make an assertion about what’s true. Rather, it asks journalists to share where scientist-sources fall along that continuum of truth claims.

With this approach a reporter would ascertain not which truth claim is most likely to be valid, but which has garnered the most support from the scientists qualified to vet it. And in writing a story they can then make a statement about what’s true while lowering the risk of being perceived as unfair or biased. The journalist retains an ‘informing’ role, but by telling the audience that most of the scientists studying the issue ‘land here rather than there’, their story can also make a clear validity statement about which ‘truth’ is likely to hold over time.

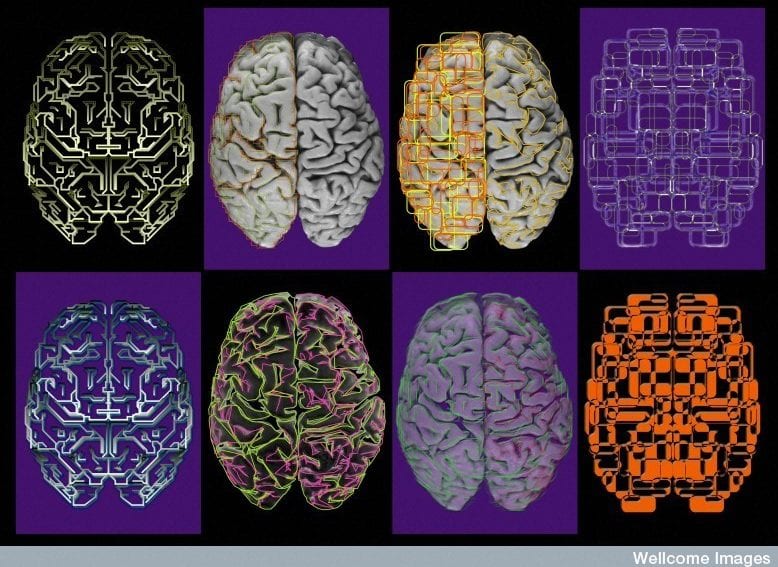

With a research group at the University of Wisconsin-Madison, USA, I have tested this idea, with promising results. We chose a debate about how the brain comes to recognise faces. It pits a theory that the skill resides in a specialised part of the brain against one that argues recognition is the result of practice — that the brain just gets better at facial recognition over time.

At the time of the experiment, the theory of a specialised brain region had the most scientific support. We split readers in the United States into two groups and showed them online science stories: one group read stories that made that weight-of-evidence assertion at the top, while the other read stories that simply laid out the two claims. Then we asked our respondents which of the two theories scientists thought was true, and which they themselves thought was true.

As expected, those who read the ‘weight-of-evidence’ stories were more likely to tell us that scientists thought the specialised brain area hypothesis was true. And that conclusion about the scientists meant that those readers were also more likely to believe it themselves. So the weight-of-evidence text had a direct effect on respondents’ judgments about scientists’ beliefs and an indirect effect on their own judgments.

One experiment proves little, of course, but other science communication researchers are also exploring this process — and it will be important to also check whether it holds true in other cultures.

Sharon Dunwoody is Evjue-Bascom Professor Emerita in the School of Journalism and Mass Communication at the University of Wisconsin-Madison, USA. She can be contacted at [email protected]