By: David Dickson

Fears that the US government may place excessively heavy restrictions on those working with the SARS virus highlight the need to ensure that concerns about the potential misuse of biological organisms are not allowed to impede important research.

One of the most impressive aspects of the worldwide response to last year's outbreak in East Asia of severe acute respiratory syndrome (SARS) was the speed with which both the scientific and medical communities were able to identify and contain the problem. A previously unknown disease that, if unchecked, could well have killed millions caused, in the end, no more than several hundred deaths. And this achievement was due in part to the extent to which scientists around the world, led by the World Health Organisation, were able to engage in a vast collaborative effort to get a handle on the epidemic.

It is, therefore, alarming to hear that government officials in the United States are considering placing the SARS virus on a list of 'select agents' that can only be handled in facilities that have stringent security measures. These include physical security procedures, such as 24-hour guards and special locks on laboratories, as well as background checks on staff and severe limitations on the involvement of foreign researchers (see Scientists call on US to keep SARS studies unrestricted).

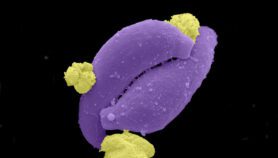

No one denies that the highly infective virus needs to be handled with great care; two outbreaks this year, one in Taiwan and the second in China, have been associated with laboratories that were studying the disease, and where safety precautions were clearly inadequate. Equally, there is evidence that the strict application of basic hygienic practices by hospitals involved in treating those diagnosed with the disease was one of the most effective measures responsible for stopping the epidemic.

The concern, however, is that in their zeal to be seen to be meeting public concerns about potentially lethal biological agents getting into the hands of terrorist groups, the US authorities may end up over-reacting. By doing so, they could jeopardise the involvement of US scientists in the search for a treatment for the disease and for an effective vaccine, both of which have yet to be found. And this in turn could delay the achievement of either goal.

Bioterrorism concerns

Of course, the issues raised are not restricted to the SARS virus. There has been growing concern in recent years about the possibility of lethal biological agents falling into the wrong hands, and being used as weapons either by terrorist groups, or even individuals. Several incidents have underlined the potential dangers, in particular those in the United States in which live anthrax spores were sent through the mail. And fears that terrorists, unlike warring nations, are unlikely to have moral qualms about using such strategies indiscriminately against civilian groups or populations have only increased levels of concern in official circles.

In such situations, it is certainly legitimate for governments to take steps to protect their citizens; hence the recent introduction of increased security procedures designed to make it more difficult for unauthorised individuals to gain access to laboratories in which biological agents are being studied. The fear, obviously, is that even a small sample of a pathogenic organism could be sufficient to cause damage if released maliciously.

The difficulty lies in assessing when such restrictions become excessive; in other words, when the costs involved in applying them become heavier than any benefits that can be anticipated. This is particularly the case in so-called 'dual use' research, which is primarily directed at humanitarian ends (as is the case with much basic research on pathogens and infectious agents), but where the biological material being studied also has potentially dangerous uses. Here the threat is that an excessively heavy-handed approach could have a chilling effect on research, undermining efforts to develop effective diagnosis and treatment (including vaccines).

Sadly there is anecdotal evidence that this may already be happening. Some researchers specialising in pathogenic organisms, for example, are said to be deciding to destroy their research samples rather than pay for the expensive new security measures required to keep them in storage. And it is already clear that the new restrictions being placed on sending certain types of biological samples through the mail are becoming a serious impediment to international research collaboration.

Would a code of conduct help?

Such issues are being addressed increasingly seriously by the US scientific community. For example, a report, Biotechnology Research in an Age of Terrorism, published last year by a committee of the National Academy of Sciences, spoke openly of the need for a "balanced approach" to mitigate threats from bioterrorism without impeding progress in biotechnology. The concern of scientists is that an unthinking, heavy-handed approach, designed as much to counter public fears whipped up by media hype as to reflect a calm assessment of the reality of the situation, may lead to measures that could limit their ability to fully back efforts to combat some of the world's most dangerous diseases.

What are the options? Some have argued that self-policing is the appropriate response; for example, that all scientists engaged in the life sciences should be persuaded to sign up to a code of conduct (much as all physicians are required to take the Hippocratic Oath promising to defend human life). Indeed, the idea that an internationally-approved code of this nature could help control the spread of biological weapons, while ensuring an appropriate balance between the interests of science and security, is currently on the agenda for international discussions surrounding progress on implementation of the Biological and Toxin Weapons Convention. The issue is due to be addressed explicitly next year, under the leadership of the United Kingdom.

The advantage of a code of conduct is that it puts responsibility back on the shoulders of the individual researcher to prevent his or her research being misused, rather than delegating this to a government authority. The disadvantage is that, in the absence of a licensing system for professionals, it is difficult to see what would give it teeth. Furthermore, those determined to misuse biological data for ideological or criminal ends are unlikely to worry about breaking an ethical code in doing so.

Preventative measures

Nevertheless, it is important to stimulate debate about this issue and to explore movement in this direction. One need, for example, is to reassert the benefits of full, open and international exchange of scientific data, particularly in the biomedical field. Sadly there are reports that even Chinese researchers have not been as free to share data about SARS as they might have been (either internationally, or within their own country). Restricting access to data may have the short-term economic benefit of raising one's chances of obtaining a patent, or even raising one's own professional profile. But when speed is required and human lives are at stake, it can be a shortsighted strategy.

Secondly, even without an official code of conduct, more can and should be done to make all those engaged in the life sciences aware of the potential misuses of their research. Informed vigilance, if it can be made to work, is preferable to an uninformed strategy imposed from outside. And awareness of the need for vigilance is likely to begin within the educational system, where teaching students about the broader implications of their disciplines could be the best way of enabling them to see their work in its proper context — including both beneficial and potentially dangerous consequences. Such concerns are at the centre of a campaign recently launched by the International Committee of the Red Cross designed to ensure that all life scientists, in developed and developing countries alike, become more aware of the need to take direct responsibility for their actions.

Finally, the scientific community should place robust demands on government authorities to demonstrate that, where tough restrictions are applied, these are fully justified by the circumstances, and that sufficient consideration has been applied to ensure that the benefits outweigh the costs. Sadly there is evidence that, in some of the United States' recent moves (such as the new visa application requirements for scientists and researchers wishing to enter the country), such analysis has not been as robust or convincing as it should have been. If this remains the case, not only the biomedical research community, but those it seeks to serve, will pay the price.