The outbreak of Rift Valley fever in Uganda holds important lessons for current thinking about investment in research for global development.

What is happening in Uganda is reasonably familiar: similar lessons have come up before. Epidemiological control measures designed to limit the spread of disease do not fully consider the reality for affected people. In this case, what are poor rural communities to eat, or farm, if dairy and meat sales are banned? Also, the official channels used to raise the public health alarm do not run through the community's most trusted opinion leaders.

In discussions around science for development, there is frequent talk of contextualising research so that it has impact, and the value of this seems obvious. So why do these mistakes continue to happen?

The question is particularly urgent not only because livelihoods and lives are at stake, but also because trends in research funding appear likely to make such mistakes more frequent. Ironically, it is development agencies' growing concern with impact that is tightening the constraints on learning what works.

Impact focus

The 2008 financial crisis brought two narratives on public investment in research into focus. The first is that evidence-informed policy and practice makes for more cost-effective programmes. The second is that knowledge economies drive economic growth and require sustained investment in the innovation pipeline. Both narratives converge in, and are driven by, funders' concern with impact and national interests.

Evidence of this shift is increasingly widespread. In the United Kingdom, the Higher Education Funding Council for England increased the weight given to 'impact' in determining the research funding of English universities in 2011: a larger share of the research quality criteria is now based on impact.

There are signs of the shift in the development sector as well. In 2012, the Dutch government announced a restructuring around 'knowledge platforms', each focused on a different theme (such as inclusive policies or food and business). The idea is to ensure that all research investment is channelled into a specific priority sector.

Canada's International Development Research Centre is under pressure to scrutinise its research portfolio and become more poverty-focused, as happened with the UK Department for International Development (DFID) some time ago.

Harmful expectations

To be clear, the mainstreaming of funders’ concern with research impact is a welcome development. A SciDev.Net survey in 2012 found that more than 80 per cent of NGOs do not systematically use research to inform their policy and practice. Even bilateral research funders such as DFID and the Swedish International Development Cooperation Agency have previously struggled to get parts of their respective organisations to use the research they fund.

The problem with the recent focus on research impact lies in the expectations and assumptions about how impact comes about, particularly in relation to poverty reduction.

The most common expectation is that research will lead to a specific and immediate policy response. The idea has appeal as a political soundbite. But in practice it means that any impact falling short of that goal may be dismissed. This is particularly problematic because it treats the slow and complex process of sustained social change as irrelevant.

When research uptake is conceived of in this way, it underestimates the agency of research users and the complexity of their environment, assuming they are merely awaiting instruction in the form of study findings. In the case of Rift Valley fever in Uganda, the assumption goes, the pastoralists just need to be told not to touch the meat and the spread of disease will be contained. We've seen how well that worked.

Misguided communication funding

Another concern is that this focus on impact discourages innovation and experimentation. The stakes have become too high to risk failure.

The biggest concern, however, is that the push for impact has made research more producer-focused. Funders are encouraging researchers to allocate resources for communication, so funds for uptake increasingly flow through the commissioned research projects themselves. This is an understandable instinct from a funder's perspective, as it demonstrates a level of mainstreaming that makes a portfolio look progressive. The result is that money goes to research producers, not to organisations that support the use of research.

The problem becomes clear when looking at this from the users’ perspective.

Thousands of high-quality research programmes are funded every year, targeting a limited and highly prized group of policymakers. To return to the Rift Valley fever example: if each programme communicates its own findings to these policymakers, the effect is comparable to pastoralists being inundated with uncoordinated public health messages about managing their livestock.

What is clearly required is some intelligent brokering of the research, with trusted information sources acting as mediators between knowledge producers and those most affected by the disease.

Thinking this through from the demand side should underscore the value of research systems: the capabilities needed to deliver on the assumed benefits of research. These are the local infrastructures and relationships that build both an understanding of users' needs and the ability to act on new knowledge.

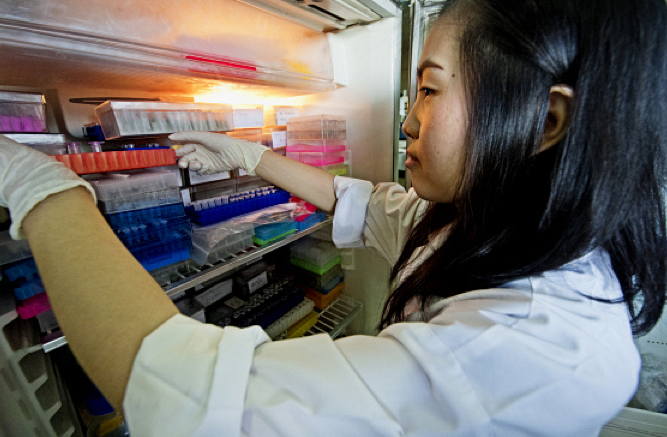

Those of us in this line of work call this assessing and contextualising the research, and mobilising demand. It cannot reasonably be delivered by any single research project. The rise of health systems research stems from a similar realisation: in that case, that health outcomes depend on more than the development of the right drug.

In the current drive for impact, spending on such systems is increasingly seen as a luxury. For instance, the British government has just cancelled a funding call for building the capacity of knowledge brokers, saying it was not enough of a high-level objective under a new aid strategy that allocates at least £2.5 billion (US$3.6 billion) to commissioning new research. SciDev.Net has noticed this attitude among a number of funders, not just DFID.

The irony is that investing in these systems sustains the efficiency of research spending. It is, in fact, through independent knowledge brokers and experts on research uptake that we learn most effectively about what works when research is put to use.

Everyone who invests in research in a marginalised community should learn from the current problems with containing Rift Valley fever.

Nick Perkins is the director of SciDev.Net. @Nick_Ishmael

SciDev.Net led a consortium that applied for the DFID Improving Communication of Research Evidence for Development (ICRED) programme.