By: Natalie Heng

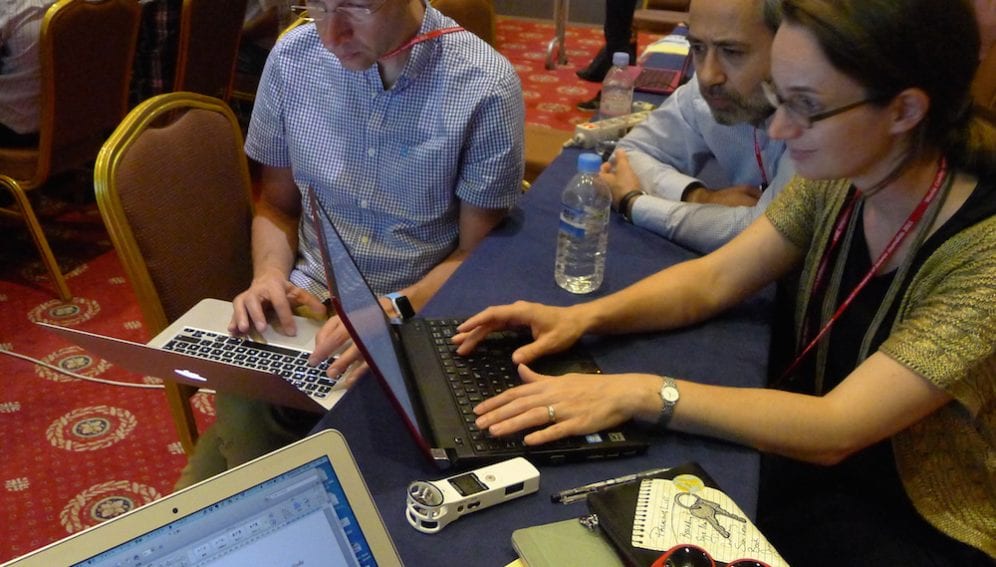

[SEOUL] At the World Conference of Science Journalists in Seoul, South Korea this week, a roomful of science communicators are trying to figure out how many US National Security Agency-funded research papers are available on Google Scholar.

“This is not public information,” John Bohannon, our workshop instructor, informs us.

But sometimes even classified information leaves a paper trail. With a few advanced Google search options and a little common sense, most of us eventually work out that “MDA904” is an NSA grant code prefix, and that wrapping the search term in quotation marks makes all the difference.
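Readers who want to repeat the exercise outside a browser can build the same exact-phrase query themselves. The minimal Python sketch below assumes only the public Google Scholar search address and standard URL encoding. The function name `scholar_query_url` is hypothetical. It shows how the quotation marks end up in the query that Google receives; it does not fetch or count any results.

```python
from urllib.parse import urlencode

# Public Google Scholar search endpoint (the query goes in the "q" parameter).
SCHOLAR_SEARCH = "https://scholar.google.com/scholar"


def scholar_query_url(phrase: str) -> str:
    """Return a Google Scholar search URL that asks for `phrase` as an exact match.

    Hypothetical helper for illustration. Wrapping the term in double
    quotation marks is Google's exact-phrase operator.
    """
    quoted = f'"{phrase}"'
    # urlencode percent-encodes the quotes (%22) and turns spaces into "+".
    return f"{SCHOLAR_SEARCH}?{urlencode({'q': quoted})}"


if __name__ == "__main__":
    # Prints https://scholar.google.com/scholar?q=%22MDA904%22
    print(scholar_query_url("MDA904"))
```

Pasting the printed URL into a browser runs the quoted search. Dropping the quotes lets Google match the term more loosely.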

John Bohannon, a regular contributor to publications such as Science and Wired, is known for investigative pieces that draw on large-scale data analysis. He is at the forefront of a movement that combines the technical literacy of a computer scientist with the mind of a reporter to make sense of untapped data in today's digital era.

Tricks like “web scraping” (mining the internet for useful information) don’t have to involve complicated code, he says. All you need is a few Google search terms and an Excel spreadsheet.

Tools such as IPython can capture the source code of web pages and let you feed unstructured data, such as police crime records, into a program that converts it into tables for analysis. With very basic programming, anyone can then turn that data into charts, tables and the like.
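To give a sense of how little code this takes, here is a minimal sketch that uses only Python's standard library rather than IPython. It is an illustration, not the workshop's own method. It assumes the records sit in a plain HTML `<table>` in the page source. The sample page, its columns and the helper names (`parse_table`, `to_csv`, `tally`) are hypothetical. The script pulls the table rows out of the HTML, writes them as CSV that Excel can open, and counts entries in one column, the kind of summary that could feed a chart.

```python
import csv
import io
from collections import Counter
from html.parser import HTMLParser

# Hypothetical page source standing in for a police crime-records page.
SAMPLE_HTML = """
<table>
  <tr><th>Date</th><th>Offence</th><th>District</th></tr>
  <tr><td>2015-06-01</td><td>Theft</td><td>North</td></tr>
  <tr><td>2015-06-02</td><td>Burglary</td><td>South</td></tr>
  <tr><td>2015-06-02</td><td>Theft</td><td>South</td></tr>
</table>
"""


class TableParser(HTMLParser):
    """Collect the text of every <th>/<td> cell, grouped into rows."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None       # cells of the <tr> currently being read
        self._in_cell = False  # True while inside a <th> or <td>

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._row.append("")
            self._in_cell = True

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._in_cell:
            self._row[-1] = self._row[-1].strip()
            self._in_cell = False
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        # Whitespace between tags is ignored; only cell text is kept.
        if self._in_cell:
            self._row[-1] += data


def parse_table(html: str) -> list:
    """Return the table as a list of rows; the first row is the header."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    return parser.rows


def to_csv(rows: list) -> str:
    """Serialise rows as CSV text that a spreadsheet can open."""
    out = io.StringIO()
    csv.writer(out).writerows(rows)
    return out.getvalue()


def tally(rows: list, column: str) -> Counter:
    """Count how often each value appears in the named column."""
    header, *records = rows
    idx = header.index(column)
    return Counter(record[idx] for record in records)


if __name__ == "__main__":
    rows = parse_table(SAMPLE_HTML)
    print(to_csv(rows))
    print(tally(rows, "Offence"))
```

On a real site, the page source would come from a saved file or an HTTP request instead of `SAMPLE_HTML`. Messier pages usually need a more forgiving parser.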

All this is part of a revolution some are calling “data-jitsu”.

Jonathan Stray, one of the facilitators at Bohannon's workshop, is also a freelance journalist and computer scientist who teaches “computational journalism” at Columbia University in the US. He thinks data literacy is the future of journalism — especially investigative journalism.

“Journalists have a long tradition of disdain for numbers, but that’s changing,” Stray says.

“Because our job isn’t really [just] writing. It’s analysis and communication, and getting information. That requires data literacy,” he notes. “In fact, I don’t think you can do investigative journalism without data work. Too many of the questions we want to ask are quantitative questions.”

Either way, interpreting and communicating data is now a basic and valuable skill for researchers, policymakers and businesses.

This article has been produced by SciDev.Net's South-East Asia & Pacific desk.